Hyperspatial

An AI canvas for thinking.

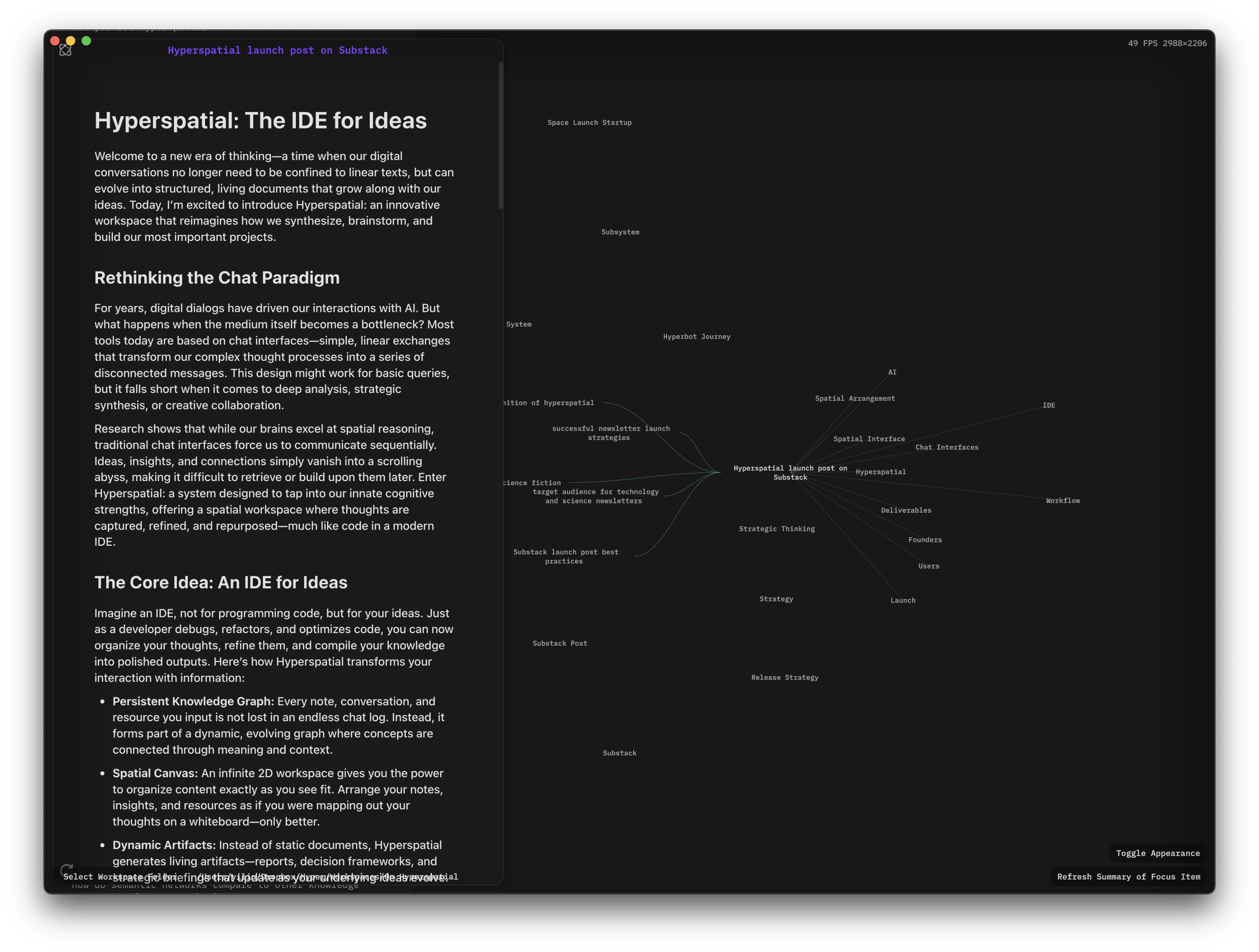

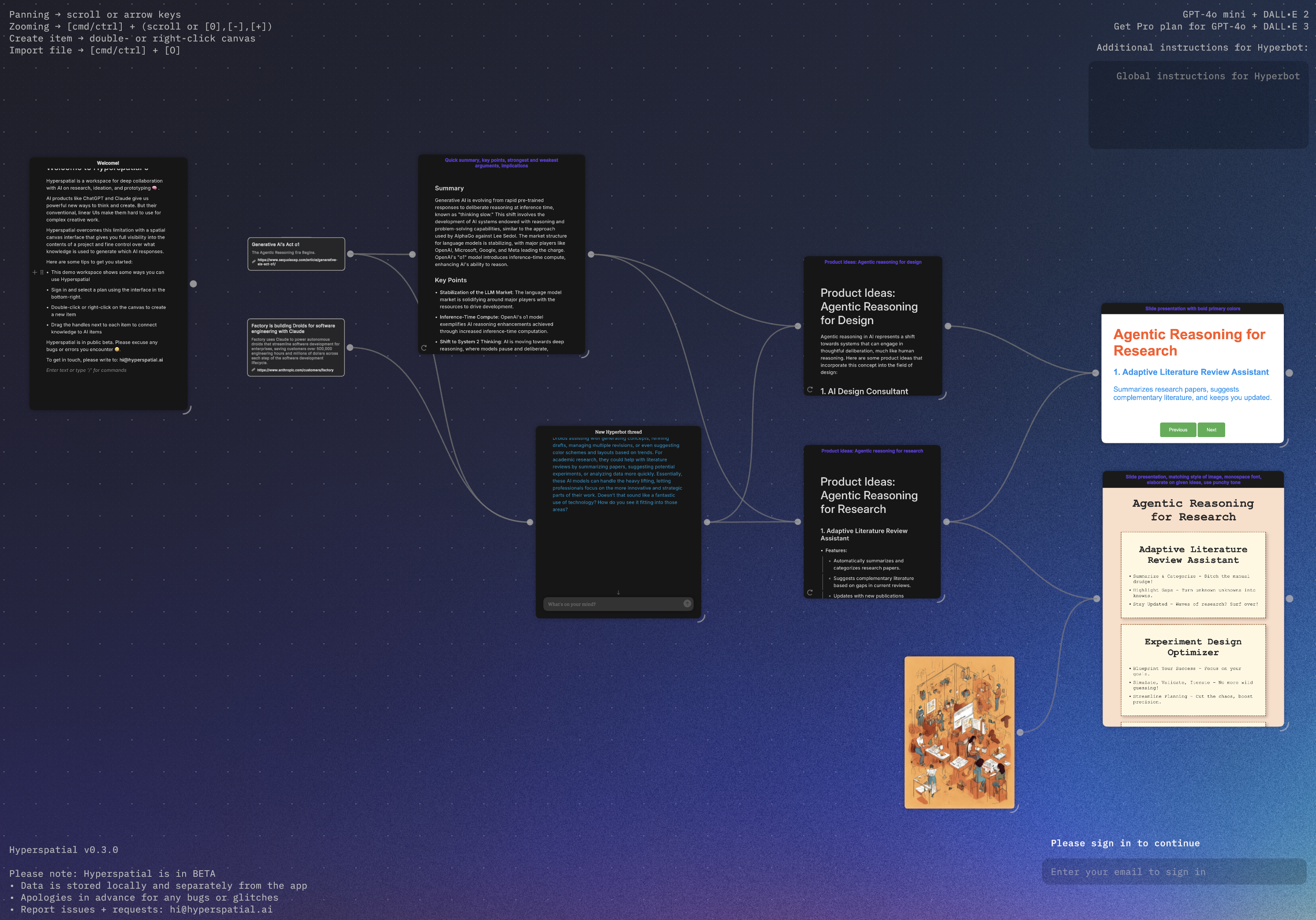

Hyperspatial was a series of prototypes exploring what an AI-native thinking environment could look like beyond the chat window. The idea drew from two sources: a frustration with the linearity of chat-based LLM interfaces, and intuitions carried over from years of building spatial computing tools. If spatial layouts could make complex information more tractable in AR, could the same principle help when working with language models on a flat screen?

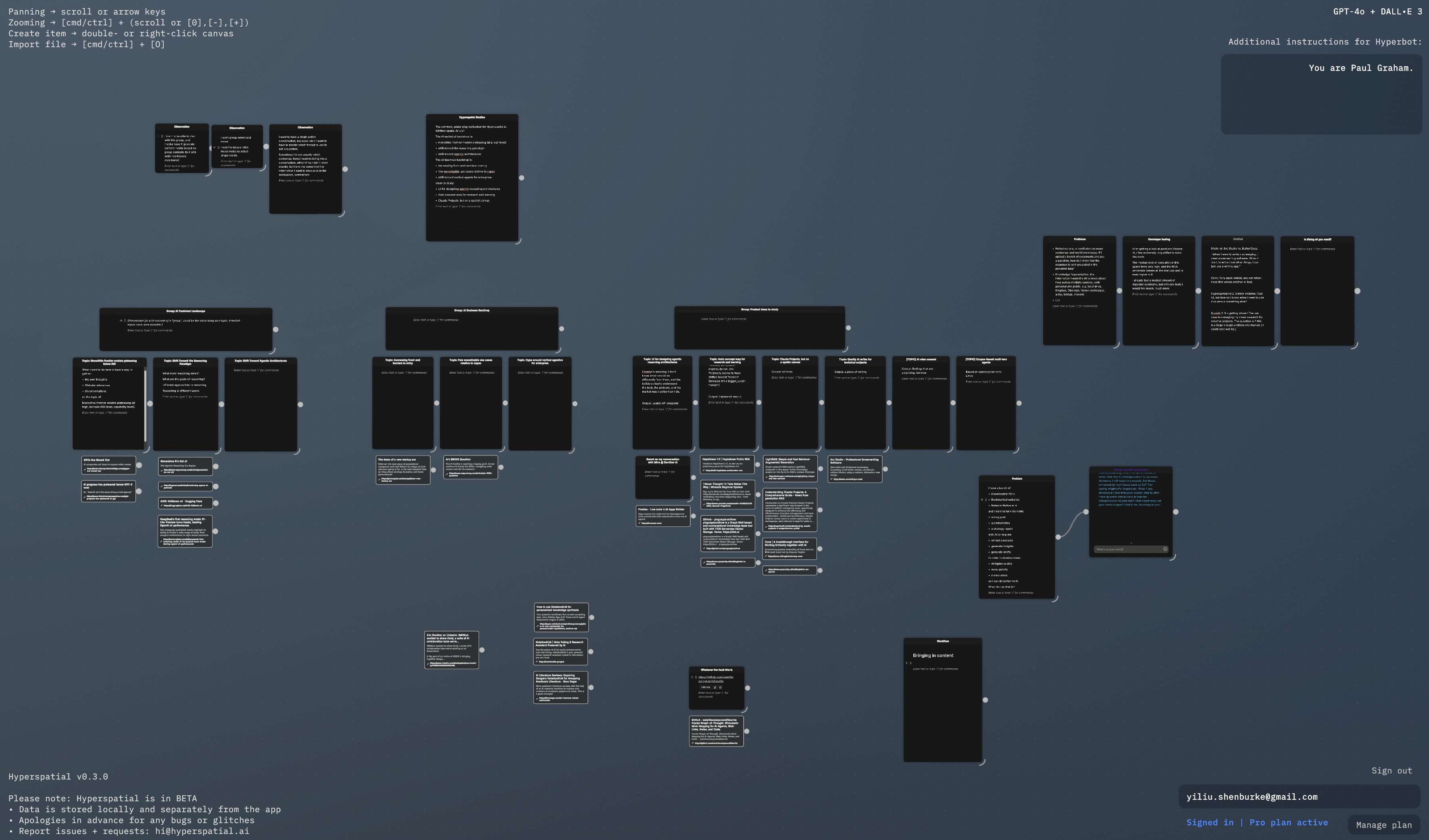

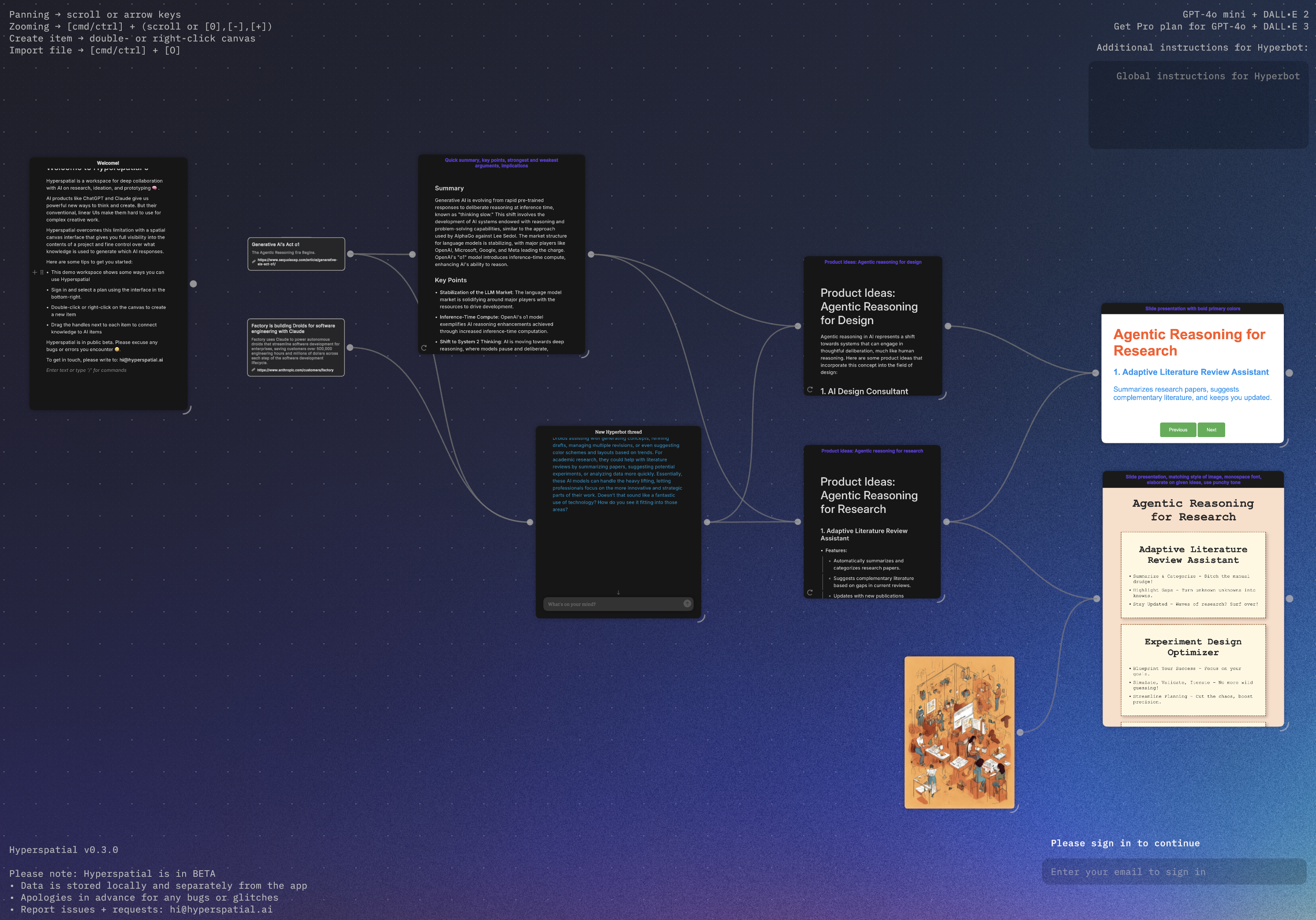

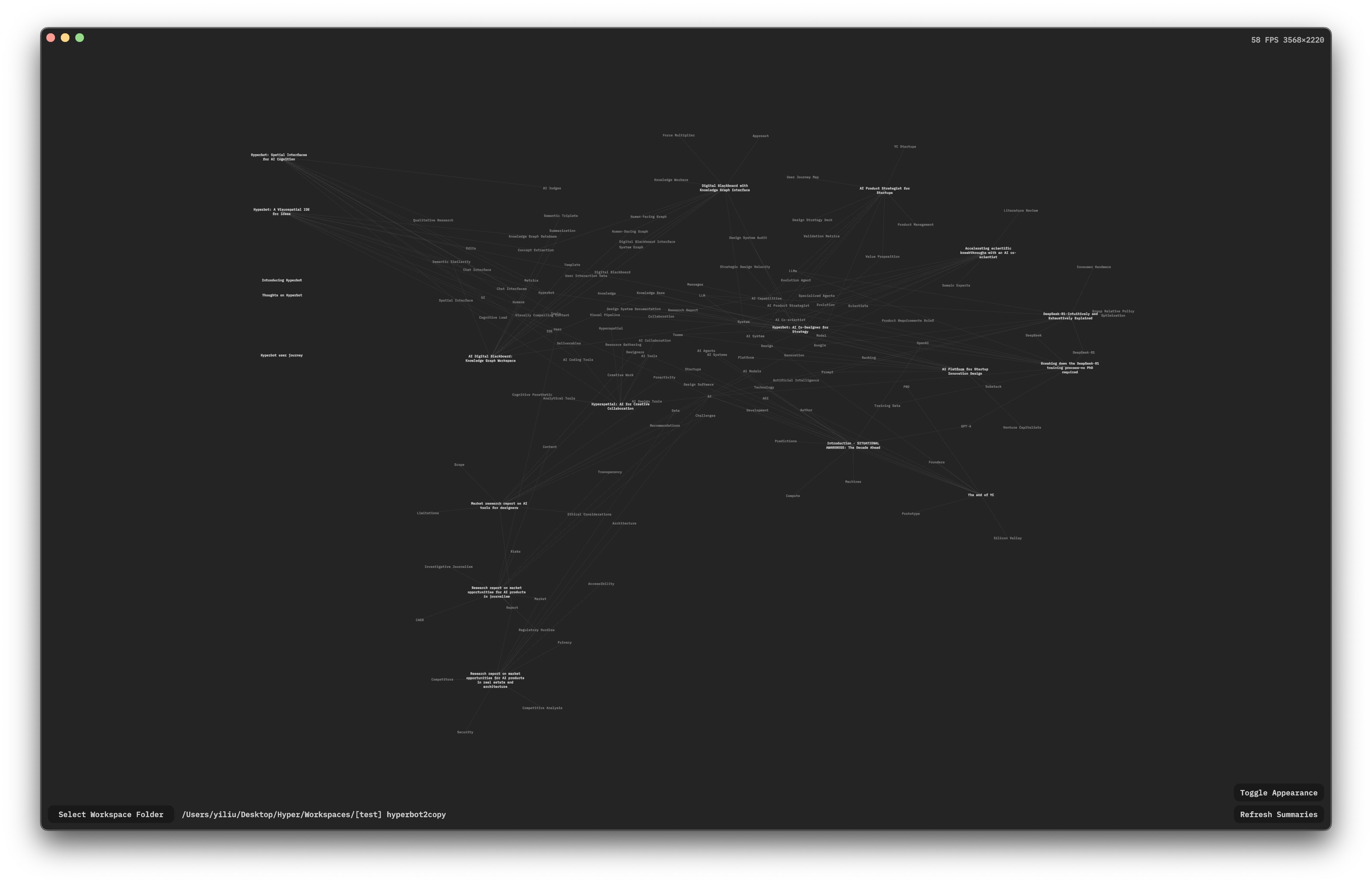

The earliest version was, hilariously, a Unity/C# app, built on the stack I knew best from years of AR development. It was a canvas where different item types (text, images, web bookmarks) could be created and piped into AI-generated outputs: new text blocks, DALL-E images, even primitive generated websites. Later iterations moved to the web and experimented with dynamic layouts driven by input/output relations and semantic clustering. The project eventually evolved into a native Mac app with a knowledge graph visualization and a side-by-side document editor.

Hyperspatial never shipped as a product, but it was a productive vehicle for learning. It was where I first developed real fluency in LLM engineering, context engineering, and the economics of AI product development — lessons that directly inform my current work.